Under the auspices of the Autonomous Systems National Laboratory (ARNL), diverse research is carried out on the control of vehicles of a single vehicle type, as well as on the control of vehicles of different vehicle types. The latter research area is referred to as cooperative control; one example is the cooperative control of a ground vehicle and an aerial vehicle.

It is usually a challenging task to control a heterogeneous group of vehicles (e.g., a human-driven ground vehicle and a drone, or an autonomous ground vehicle and a drone) in a cooperative manner, so that they accomplish a joint mission in a coordinated way. Such a coordinated operation was accomplished and successfully demonstrated on 1 July by researchers of ELKH SZTAKI (www.sztaki.hu). The coordinated operation involved a human-driven ground vehicle and a UAV.

Such coordinated operation is required for the “forerunner” drone concept (see also here and here) within the subproject entitled “FT2: Autonomous near-earth aerial solutions”. This subproject is part of the GINOP-2.3.4-15-2020-00009 project, run by the University of Győr and entitled “Developing innovative automotive testing and analysis competencies in the West Hungary region based on the infrastructure of the Zalaegerszeg Automotive Test Track”. In this mission, a UAV equipped with a downward-looking camera accompanies a human-driven vehicle, looks for dangers ahead that are out of the driver’s sight, and warns the driver about any danger identified.

In the tested cooperative control, the position and speed data of the ground unit (on board a ground vehicle or carried by a moving person) were sent to the UAV via a dedicated wireless communication channel, and the controller on board the UAV controlled its flight. In total, four test configurations were implemented and evaluated during this cooperative test. These were as follows.

- Tracking a moving person carrying the ground unit with the UAV flying 40 m above the person.

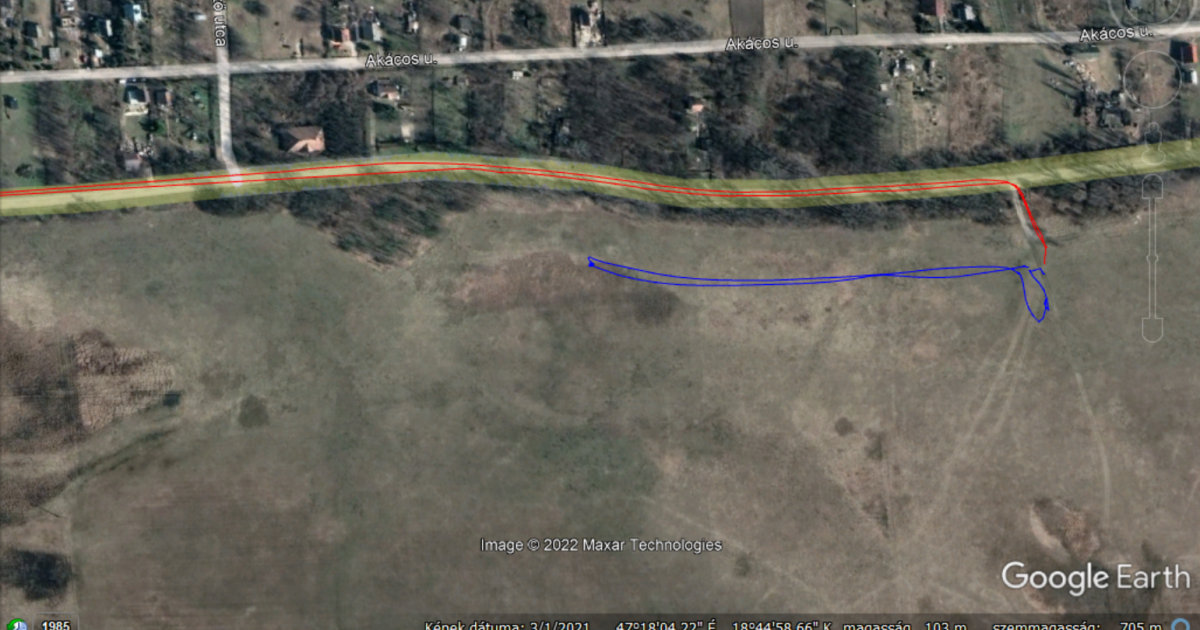

- Tracking a car moving along a road while keeping a constant lateral distance of 50 m from it. This lateral distance makes it possible to keep the UAV in a safe airspace that can be used freely. The longitudinal tracking is not influenced by the lateral offset; the targeted longitudinal offset was 0 m. In this test configuration, the flight altitude of the UAV was 100 m, so that the car could be kept within the field of view of the camera carried by the UAV.

- Similar to the previous configuration, but flying the UAV ahead of the car – at the car’s braking distance – so that the danger warning(s) from the UAV can reach the car in time.

- Similar to the previous configuration, but at a given point ‘pulling’ the UAV above the car. This is required later at crossroads and road junctions, where the driver of the car can divert the car from the pre-planned route.
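
The geometry behind configurations 2 to 4 can be illustrated with a minimal sketch. It is not the controller used in the test; it only shows how a UAV target point could be derived from the position and heading of the car under stated assumptions: a flat local east-north frame, a heading measured counterclockwise from east, a lateral offset taken as positive to the right of the direction of travel, and a constant-deceleration braking model. The function names `uav_target` and `braking_distance_m`, as well as the deceleration value, are hypothetical and chosen for illustration only.

```python
import math


def braking_distance_m(speed_kmh, decel_ms2):
    """Braking distance v^2 / (2a) for an assumed constant deceleration."""
    speed_ms = speed_kmh / 3.6  # km/h -> m/s
    return speed_ms ** 2 / (2.0 * decel_ms2)


def uav_target(car_east, car_north, heading_rad,
               lateral_offset_m, longitudinal_offset_m, altitude_m):
    """Return an (east, north, up) target point for the UAV.

    heading_rad is the car's direction of travel, measured
    counterclockwise from east (an assumed convention).
    """
    # Unit vector along the direction of travel.
    fx, fy = math.cos(heading_rad), math.sin(heading_rad)
    # Unit vector pointing to the right of the direction of travel.
    rx, ry = fy, -fx
    east = car_east + longitudinal_offset_m * fx + lateral_offset_m * rx
    north = car_north + longitudinal_offset_m * fy + lateral_offset_m * ry
    return east, north, altitude_m


# Configuration 2: 50 m lateral offset, 0 m longitudinal offset, 100 m altitude.
side_target = uav_target(0.0, 0.0, 0.0, 50.0, 0.0, 100.0)

# Configuration 3: additionally fly ahead of the car by its braking distance
# (the 6 m/s^2 deceleration is an assumed value, not taken from the test).
lead = braking_distance_m(40.0, 6.0)
ahead_target = uav_target(0.0, 0.0, 0.0, 50.0, lead, 100.0)

# Configuration 4: 'pull' the UAV above the car by setting both offsets to 0.
overhead_target = uav_target(0.0, 0.0, 0.0, 0.0, 0.0, 100.0)
```

Because the lateral and longitudinal offsets are applied along perpendicular unit vectors, changing one does not affect the other, which matches the remark above that the longitudinal tracking is not influenced by the lateral offset.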

All four configurations were successfully tested. In configurations 2 to 4, the car to be tracked moved at 20, 30 and finally 40 km/h. We note here that the maximum speed of the DJI M600 UAV used in the test is 65 km/h in still air. The cooperative mission was accomplished with acceptable precision even at the highest of these ground vehicle speeds.

When tracking a person, the position error according to the collected GPS data was mostly in the range of 2 to 4 m. This can be verified in the corresponding video: Tracking of a person with multicopter vehicle. The crosshair marking the camera centre tracks the moving person fairly closely. It should be noted that a portion of the visible tracking error may be due to the angular error of the gimbal that holds the camera.

When tracking a car at a speed of 20 km/h, the lateral position error computed from the collected GPS data was mostly in the range of 1 to 2 m, while the longitudinal position error was about 3 m. With the speed of the ground vehicle reaching 30 km/h and then 40 km/h, the mentioned GPS-based errors were in the ranges of 2 to 3 m and 4 to 5 m, respectively. These errors indicate that the tracking task gets more challenging as the ground vehicle gets faster.
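
As an illustration of how lateral and longitudinal errors can be separated, the following minimal sketch projects the horizontal position error onto the direction of travel of the car and onto the axis perpendicular to it. It is not the evaluation procedure used in the test. It assumes positions already converted from GPS to a flat local east-north frame in metres, a heading measured counterclockwise from east, and a lateral error taken as positive to the right of the direction of travel; the function name `tracking_errors` is hypothetical.

```python
import math


def tracking_errors(uav_en, target_en, heading_rad):
    """Split the horizontal tracking error into (lateral, longitudinal) parts.

    uav_en and target_en are (east, north) positions in metres;
    heading_rad is the car's direction of travel, measured
    counterclockwise from east (an assumed convention).
    """
    de = uav_en[0] - target_en[0]
    dn = uav_en[1] - target_en[1]
    # Unit vector along the direction of travel.
    fx, fy = math.cos(heading_rad), math.sin(heading_rad)
    # Projection onto the direction of travel.
    longitudinal = de * fx + dn * fy
    # Projection onto the axis pointing to the right of travel.
    lateral = de * fy - dn * fx
    return lateral, longitudinal


# Example: car heading east, UAV 3 m ahead of and 2 m to the right of
# its target point (illustrative numbers, not measured data).
lateral_err, longitudinal_err = tracking_errors((3.0, -2.0), (0.0, 0.0), 0.0)
```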

Tracking errors similar to the aforementioned GPS-based ones were computed from the video data, which can be viewed in the following videos: Ground vehicle tracking with zero offset and Ground vehicle tracking with variable offset. The crosshair indicates the location where the car should be if the longitudinal tracking distance were 0 m.

In the former video, it can be seen that after a short transition period the quality of the car tracking is acceptable: the horizontal line of the crosshair appears fairly close to the car. In the latter video, the horizontal line should initially appear ahead of the car, then over it, and finally ahead of it again. These stages of the tracking can be identified in the video. Clearly, if longer road segments were available for cooperative testing, these stages would be even more distinguishable. We note here that the UAV stops tracking after a while. This is because it reaches the limit of the safety pilot’s comfortable line-of-sight distance. There it waits for the car to return, and then restarts tracking from standstill. At the end of the test, the UAV stops over its initial position and does not track the car any further as it turns off the road.